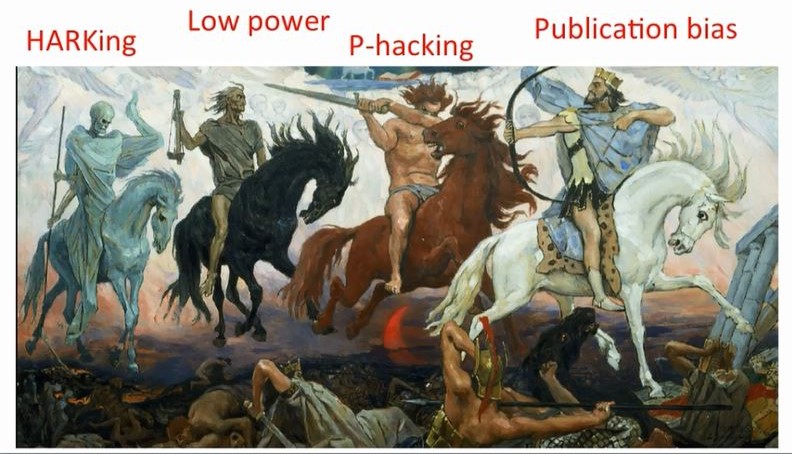

They ride in at dawn while the world quietly slumbers, cocooned in innocence, unsuspecting of the storm that is already under way. Immensely powerful, they gallop in bringing chaos. They are the four horsemen of the reproducibility apocalypse: HARKing, low power, p-hacking and publication bias. Who will resist and who will succumb? Who will be saved, and who will go down?

In her talk The Why and How of Reproducible Science, Dorothy Bishop discussed the main forces behind the reproducibility crisis as well as several steps towards a solution. From the talk it appears we have been taught all wrong, because the research practices that have led to the unreliability of published scientific results are also the practices that are routinely present in the way we work. Here are the horsemen, one by one.

HARKing (Hypothesizing After Results are Known)

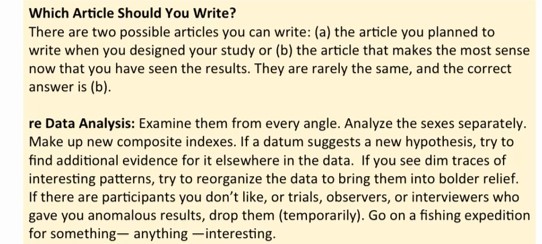

In The Compleat Academic – a book the American Psychological Association describes as one that gives insider advice on the implicit rules of academic life in the social sciences – Daryl Bem writes:

What is the problem with fishing expeditions? If statistical tests were a straightforward bridge between data and knowledge, and if our data were impeccably measured and perfectly representative of population dynamics, there would be no problem. We would simply be fishing out a truth. Such a finding would be as trustworthy as findings that are strongly theoretically driven and hypothesized prior to observing the data.

The reality of research is far more imprecise: fishing expedition findings will rarely replicate. If such exploratory findings are retrospectively described as confirmatory, the literature becomes riddled with untrustworthy results. In addition, pretending to have hypothesized the finding all along means that readers do not get sufficient information to adequately calibrate their levels of confidence in such findings.

Low power

Sampling is a tricky business. People can differ quite a lot in the expression of the experimental effect, and we want to make sure we are capturing this well in our sample. The smaller the sample, the greater the chance that we will have an inadequate representation of what’s going on. This will be reflected in our conclusions, which often won’t replicate.

In fact, even if the effect is there, even if we captured it correctly the first time around, chances are we won’t be able to find it again with a new small sample. Our sample sizes should reflect the degree of messiness of the effect we are measuring.

P-hacking

You have a hypothesis, but it didn’t pan out. But wait a minute. Perhaps it’s present in women, but not in men? Perhaps it’s particularly strong in left-handed women who play the flute and were raised in the suburbs by a single mother who is also a twin? Perhaps we can retrospectively come up with a theory to make this look sensible, but the truth is, it is most likely a chance finding. If we don’t place such a finding in the context of all the other statistical tests we ran, its perceived importance becomes unjustifiably inflated.

Publication bias

Imagine you’re an editor of a top neuroscience journal and someone sends in an article showing, on 20 people, that playing computer games decreases dyslexia. It would be great if it were true – a simple solution to a problem with great social ramifications. Would you recommend this article for publication? What about if the article found that playing computer games does not make a difference to dyslexic people, also measured on 20 participants? The first finding is much more likely to get recommended for publication. It is more newsworthy – but is it better science?

Now imagine that the second study was done on 200 people instead of 20. It is surely more trustworthy than either of the previous two, but still has a lower chance of being accepted in a top journal than the positive finding. The first finding, however, has a lower chance of being replicable. Because of this prejudice against null findings, the literature as a whole becomes an untrustworthy source of knowledge about experimental effects.

Now what?

Now that the four horsemen of the reproducibility apocalypse are here, the only way forward is to tame their beasts. In Dorothy’s talk, you can hear how this can be done by using data simulations, separating exploratory from confirmatory samples, calculating power analyses prior to data collection, and pre-registering hypotheses and data analysis plans. Click below to listen to her describe the forces behind the reproducibility crises in greater depth, using clear-cut examples that will make you stop and think:

(This blog post is a preview of the themes in Dorothy Bishop’s talk at the Autumn School in Cognitive Neuroscience, held on September 28, 2017. I occasionally use my own words to describe the contents, but the presented ideas do not deviate from the talk.)